User-generated content (UGC) on social media and other digital platforms is more prevalent than ever. How could it not be, with 5.3 billion internet users worldwide? Keeping track of all the content they generate takes a great deal of work.

This constant growth of UGC shapes how people express themselves, engage with others, and share their thoughts, ideas, and creativity online. Many individuals share whatever comes to mind and influence others, positively or negatively. This is why UGC content moderation is essential.

You may ask: what is UGC moderation? And how do content moderation services help build a brighter, safer future for online communities?

User-generated content is content that individuals voluntarily create and share across various platforms. It can take almost any form, such as images, videos, reels, vlogs, blogs, product reviews, social media posts, and articles.

Meanwhile, UGC moderation involves reviewing, managing, and assessing the content users create online to ensure it complies with community rules and guidelines. As a content moderation service, it monitors UGC for appropriateness, relevance, and adherence to platform standards.

The fact that 510,000 comments are posted on Facebook and 16,000 videos are uploaded to TikTok every minute underscores the importance of UGC content moderation. Effective moderation goes a long way toward maintaining a positive and enjoyable online environment.

The Current State of UGC Content Moderation

UGC proves its significance by empowering individuals to share diverse perspectives and by adding dynamic content to online platforms. Its richness reflects real-life experiences, sparks inspiration, and builds genuine connections among users.

The abundance of UGC adds to a platform's overall content diversity and gives users a wide range of information and entertainment. Users can then engage with people whose preferences and interests align with theirs, fostering a sense of inclusivity and belonging.

Moreover, UGC is a powerful marketing tool that lets businesses draw on users' experiences with their products and services. Customer feedback is a prime example. Customers share recommendations and reviews, which can influence consumer behavior and shape a business's credibility.

While digital platforms such as social media allow individuals to exercise their creative freedom, they also present the following challenges that call for UGC content moderation:

- Spread of Misinformation

Unmoderated UGC can lead to the rapid dissemination of manipulated and inaccurate information. Users might consume and believe false content and make choices based on misleading information.

Additionally, unchecked content can make people doubt whether what they read is true. This makes it hard to trust information online and weakens how much they rely on the website or platform.

- Cyberbullying and Harassment

Without effective moderation, online spaces become breeding grounds for cyberbullying and hate speech, harming the mental well-being of the users targeted.

- Privacy Concerns

Unregulated UGC may expose, exploit, and misuse personal and sensitive information, putting many individuals at risk.

- Inappropriate Content

Inappropriate, offensive, and disturbing content can make users, especially younger audiences, feel uncomfortable and unsafe.

To effectively and efficiently address these challenges, let’s delve into the innovations and emerging trends in UGC moderation that we can look forward to.

Innovations in UGC Content Moderation

Artificial Intelligence (AI) and Machine Learning (ML) Advancements

Using AI for real-time content analysis is an innovative approach to UGC content moderation. AI algorithms analyze UGC and quickly flag content that may be inappropriate or harmful. This improves the efficiency of content moderation, and models that are regularly retrained on fresh data can keep pace with evolving trends and challenges.

Meanwhile, machine learning (ML) brings a more nuanced understanding to content moderation. ML is a subset of AI, and deep learning, one of its branches, helps models grasp the context, tone, and subtle nuances within UGC. These advancements enable a more refined and accurate approach, helping distinguish harmful content from harmless content.

AI and ML help speed up the content moderation process and reduce the risk of false positives, balancing freedom of expression with a safe online environment. Together, they mark a step forward in identifying and addressing the complex challenges of moderating UGC.
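To make the idea of an algorithm flagging harmful content more concrete, here is a minimal Python sketch. It uses a toy multinomial Naive Bayes classifier, a far simpler technique than the deep learning models described above, trained on a hypothetical six-example dataset. The class name `NaiveBayesModerator`, the labels, and the 0.8 threshold are all illustrative assumptions.

```python
import math
from collections import Counter

# Hypothetical toy training set; a real system would use many thousands of labeled posts.
TRAINING = [
    ("you are an idiot", "harmful"),
    ("i will hurt you", "harmful"),
    ("idiot go away", "harmful"),
    ("great photo thanks for sharing", "ok"),
    ("love this recipe", "ok"),
    ("thanks for the helpful review", "ok"),
]


def tokenize(text: str) -> list[str]:
    """Lowercase and split on whitespace (deliberately simplistic)."""
    return text.lower().split()


class NaiveBayesModerator:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts: dict[str, Counter] = {}
        self.doc_counts: Counter = Counter()
        self.vocab: set[str] = set()

    def train(self, examples: list[tuple[str, str]]) -> None:
        for text, label in examples:
            tokens = tokenize(text)
            self.word_counts.setdefault(label, Counter()).update(tokens)
            self.doc_counts[label] += 1
            self.vocab.update(tokens)

    def prob_harmful(self, text: str) -> float:
        total_docs = sum(self.doc_counts.values())
        log_scores = {}
        for label, counts in self.word_counts.items():
            total_words = sum(counts.values())
            # Log prior plus the smoothed log likelihood of each token.
            score = math.log(self.doc_counts[label] / total_docs)
            for tok in tokenize(text):
                score += math.log((counts[tok] + 1) / (total_words + len(self.vocab)))
            log_scores[label] = score
        # Turn log scores into probabilities (numerically stable softmax).
        top = max(log_scores.values())
        weights = {label: math.exp(s - top) for label, s in log_scores.items()}
        return weights["harmful"] / sum(weights.values())

    def should_flag(self, text: str, threshold: float = 0.8) -> bool:
        # A higher threshold flags less often: fewer false positives, more misses.
        return self.prob_harmful(text) >= threshold


model = NaiveBayesModerator()
model.train(TRAINING)
print(model.should_flag("you idiot"))           # True
print(model.should_flag("thanks for sharing"))  # False
```

Raising `threshold` makes the model flag less often, which cuts false positives at the cost of missing some harmful posts; each platform would tune that trade-off against its own guidelines.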

Natural Language Processing (NLP) Developments

Natural Language Processing (NLP) is a field of AI that focuses on understanding, interpreting, and generating human language. NLP is one of the vital innovations in UGC content moderation because:

- It can process a significant volume of natural language data, such as posts, comments, captions, and speech transcribed from videos.

- It can analyze the sentiment conveyed in text or speech, determining whether the expressed emotions are positive, negative, or neutral.

- It can also identify language cues that indicate sarcasm or irony.

- It facilitates contextual understanding and automatic content translation.

- It allows moderators to comprehend and evaluate UGC in different languages. This is crucial for businesses and online platforms with a global user base.
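As a rough illustration of sentiment analysis, the sketch below scores text against a tiny hand-written lexicon and flips the polarity of a sentiment word that follows a negation. The lexicon, the `sentiment` function, and its scoring rules are hypothetical; real NLP systems rely on trained models, and even those find sarcasm and irony hard, which this sketch does not attempt.

```python
import re

# Hypothetical mini-lexicon; production tools use large lexicons or trained models.
LEXICON = {"love": 2, "great": 2, "helpful": 1, "good": 1,
           "hate": -2, "awful": -2, "stupid": -2, "bad": -1}
NEGATIONS = {"not", "never", "no"}


def sentiment(text: str) -> str:
    """Label text as positive, negative, or neutral from summed word scores."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    negate = False
    for tok in tokens:
        if tok in NEGATIONS:
            negate = True  # flip the next sentiment-bearing word
            continue
        value = LEXICON.get(tok, 0)
        if value and negate:
            value = -value
            negate = False
        score += value
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("I love this, great work!"))  # positive
print(sentiment("This is not good"))          # negative
print(sentiment("Posted a new video today"))  # neutral
```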

Ethical Considerations and User Privacy

Users these days are much more cautious when providing personal information. Businesses are expected to uphold data privacy and protection, even while moderating content.

They must respect users’ choices about the extent of information they are comfortable sharing. This way, platforms can gain valuable insights about user interests without compromising personal details.

Additionally, platforms should regularly update users on privacy measures. This maintains transparency in handling user data during content moderation.

Here are some ways platforms can promote fairness and objectivity in AI-driven content moderation:

- Diverse Training Data

Train the AI model on a diverse dataset that covers various characteristics, perspectives, and demographics. This helps the model understand and fairly assess content from many sources.

- Inclusive Teams

Form inclusive teams responsible for developing and maintaining AI-driven moderation systems. Diverse viewpoints help identify and address potential biases during the moderation process.

- Explainability and Transparency

Users deserve a clear explanation of how the AI model makes decisions. Being transparent with users helps them understand the reasons and criteria behind actions taken by the system.

- Continuous Monitoring and Regular Audits

Implement ongoing evaluations to identify any patterns of unfairness and adjust the model accordingly.

Also, conduct regular audits to assess the performance of the AI-driven moderation system. This external review upholds objectivity in evaluating the system.
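One concrete form this monitoring can take is comparing false-positive rates across user groups: if benign posts from one group are flagged far more often than others, the model may be biased. This minimal sketch assumes each record is a `(group, flagged_by_model, actually_harmful)` tuple from a human-reviewed sample; the group names, the data, and the 0.1 gap are hypothetical.

```python
from collections import defaultdict

# Each record: (group, flagged_by_model, actually_harmful). Groups and data are hypothetical.
Record = tuple[str, bool, bool]


def false_positive_rates(records: list[Record]) -> dict[str, float]:
    """Share of benign posts in each group that the model wrongly flagged."""
    benign = defaultdict(int)
    wrongly_flagged = defaultdict(int)
    for group, flagged, harmful in records:
        if not harmful:
            benign[group] += 1
            if flagged:
                wrongly_flagged[group] += 1
    return {group: wrongly_flagged[group] / benign[group] for group in benign}


def audit(records: list[Record], max_gap: float = 0.1) -> list[str]:
    """Groups whose false-positive rate exceeds the best group's by more than max_gap."""
    rates = false_positive_rates(records)
    best = min(rates.values())
    return sorted(group for group, rate in rates.items() if rate - best > max_gap)


records = (
    [("group_a", False, False)] * 9 + [("group_a", True, False)]
    + [("group_b", False, False)] * 6 + [("group_b", True, False)] * 4
    + [("group_b", True, True)] * 3
)
print(false_positive_rates(records))  # {'group_a': 0.1, 'group_b': 0.4}
print(audit(records))                 # ['group_b']
```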

Emerging Trends in UGC Content Moderation

Image and Video Recognition Technologies

These refer to advanced UGC content moderation tools and systems that automatically detect, analyze, and moderate visual content. Beyond that, they can even identify sentiment in images and videos and flag inappropriate or irrelevant ones.

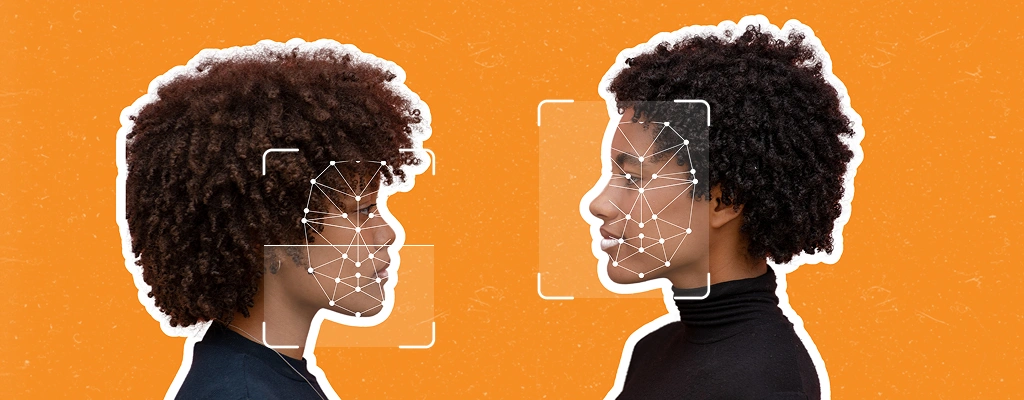

Speaking of visual content, there is a rampant deceptive practice called “deepfake.” It is a type of manipulated media in which a person's face, body, or voice is convincingly altered or swapped to resemble someone else's. Deepfakes can look so realistic that it is challenging to distinguish what is real from what is not.

That said, seeing is not always believing. The following are a few ways platforms can prevent the spread of deepfakes and maintain the integrity of UGC:

- Implement advanced facial recognition technology that analyzes facial expressions.

- Use AI algorithms for anomaly detection, such as unusual patterns in facial movements, lighting, or context.

- Examine metadata, which includes details like the publication date, location, and editing history.

- Establish a comparison mechanism. You can use reverse image search tools, such as Google Images, to find the original version of an image and spot disparities between it and any manipulated copies.
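Reverse image search engines do not publish their internals, but perceptual hashing is one well-known way to compare an image with a possibly altered copy. The sketch below implements a simple average hash on a small grayscale grid (real implementations typically downscale to 8x8 first) and counts the differing bits; the sample images are made up.

```python
# Pixels are grayscale values (0-255), assumed already downscaled to a small grid.
Image = list[list[int]]


def average_hash(pixels: Image) -> int:
    """Set one bit per pixel: 1 if brighter than the image's mean, else 0."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


original = [[10, 20, 30, 40], [50, 60, 70, 80],
            [90, 100, 110, 120], [130, 140, 150, 160]]
brightened = [[p + 20 for p in row] for row in original]  # same picture, edited exposure
tampered = [[200, 200, 200, 200]] + original[1:]          # top region replaced

h = average_hash(original)
print(hamming_distance(h, average_hash(brightened)))  # 0
print(hamming_distance(h, average_hash(tampered)))    # 8
```

A brightness change leaves the hash intact, while replacing part of the image flips many bits, so a platform could treat a small distance as a match with a known original and a large one as a sign of editing. Perceptual hashes are not deepfake detectors on their own; they help match uploads against known source images.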

By incorporating image and video recognition technologies, platforms can improve their ability to efficiently and accurately assess a vast amount of visual content. These technologies help them ensure that UGC aligns with community guidelines. This trend reflects a proactive approach to keeping a safe online community and leveraging the power of automation in analyzing multimedia content.

Integration of Blockchain Technology

The advancement of blockchain technology is one of the trends shaping UGC's future. Because a blockchain is decentralized, no single party controls the record of moderation decisions, which supports fairness and accountability in UGC moderation. Here’s how blockchain proves its significance to content moderation:

- Transparency

Blockchain supports transparency by creating a tamper-resistant record of content moderation decisions. Every decision in the moderation process is securely recorded, providing a clear and transparent history of content-related activities.

- Traceability

Blockchain also enables the tracking of content from creation to moderation. This traceability helps verify the authenticity and origin of visual content, such as images or videos, through traceable and unchangeable records. As a result, unauthorized changes become easier to detect, which enhances the overall integrity of UGC.
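The tamper evidence behind these records comes from hash chaining: each entry stores the hash of the previous one, so altering any past decision invalidates the chain from that point on. The sketch below shows only that idea on a single machine. It has no distributed consensus, so it is not a blockchain, and `ModerationLog` and its fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the entry."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class ModerationLog:
    """Append-only, hash-chained log of moderation decisions (single-node sketch)."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, content_id: str, action: str, reason: str) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"content_id": content_id, "action": action,
                "reason": reason, "prev_hash": prev}
        self.entries.append({**body, "hash": entry_hash(body)})

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or entry_hash(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = ModerationLog()
log.record("post-101", "removed", "hate speech")
log.record("post-102", "approved", "within guidelines")
print(log.verify())                    # True
log.entries[0]["action"] = "approved"  # someone quietly rewrites history
print(log.verify())                    # False
```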

Collaborative Moderation Models

Another UGC moderation trend to look forward to is collaboration among human moderators, AI moderation, and users.

Human moderators use their judgment and discernment to understand cultural nuances, while automated content moderation contributes efficiency and consistency. Together, this duo leads to a balanced and effective content moderation process.

Additionally, involving users in the moderation process is crucial. User reports can serve as valuable inputs for identifying and preventing the spread of inappropriate content. So, encourage users to be mindful as they check and assess the relevance of any content they come across online.

This trend reflects a shift toward a more transparent, participatory approach to managing UGC. Aside from being active users of a particular platform, users also become responsible and vigilant protectors of the digital space.
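A collaborative pipeline could combine an AI confidence score with user reports to decide whether a post is removed automatically, sent to a human moderator, or published. This is a minimal sketch; the `Post` fields, the routing labels, and every threshold are hypothetical values a platform would tune.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    ai_score: float    # model's estimated probability that the post is harmful
    user_reports: int  # number of users who reported the post


def route(post: Post, remove_at: float = 0.95, review_at: float = 0.6,
          report_threshold: int = 3) -> str:
    """Decide who handles a post; thresholds are hypothetical and platform-specific."""
    if post.ai_score >= remove_at:
        return "auto_remove"   # AI is very confident: act immediately
    if post.ai_score >= review_at or post.user_reports >= report_threshold:
        return "human_review"  # uncertain or community-flagged: a person decides
    return "publish"


for p in [Post("a", 0.97, 0), Post("b", 0.70, 0), Post("c", 0.20, 5), Post("d", 0.20, 0)]:
    print(p.post_id, route(p))
```

Sending uncertain cases to people rather than auto-removing them reflects the division of labor described above: automation handles clear-cut volume, while human judgment covers nuance and user reports catch what the model misses.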

Flexibility and Customization in Moderation Settings

Flexible moderation settings help platforms quickly adapt to dynamic user behaviors and emerging content challenges. This flexibility is a stepping stone to user satisfaction and shows a platform's responsiveness to users' ever-changing interests.

Customization, meanwhile, lets users tailor their own content viewing experiences. Individuals can set preferences for the type of content they want to see, giving them greater control over their digital interactions. This way, they engage with content that aligns with their interests and values.
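In its simplest form, customization can mean filtering a feed against each viewer's own settings. This sketch assumes posts already carry topic labels and a sensitivity level assigned during moderation; the label names, the 0 to 3 scale, and `personalized_feed` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ViewerSettings:
    """Per-user preferences; label names and the 0-3 sensitivity scale are hypothetical."""
    hidden_labels: set[str] = field(default_factory=set)
    max_sensitivity: int = 3  # 0 = only the mildest content, 3 = everything allowed


@dataclass
class Post:
    post_id: str
    labels: set[str]
    sensitivity: int  # assumed to be assigned upstream by moderators or classifiers


def personalized_feed(posts: list[Post], settings: ViewerSettings) -> list[str]:
    """Return IDs of posts that pass this viewer's own filters."""
    return [
        p.post_id
        for p in posts
        if not (p.labels & settings.hidden_labels)
        and p.sensitivity <= settings.max_sensitivity
    ]


posts = [
    Post("p1", {"cooking"}, 0),
    Post("p2", {"news", "violence"}, 2),
    Post("p3", {"gaming"}, 3),
]
settings = ViewerSettings(hidden_labels={"violence"}, max_sensitivity=2)
print(personalized_feed(posts, settings))  # ['p1']
```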

Future Challenges and Considerations

Although optimism is essential, anticipating obstacles in UGC content moderation is still necessary. Knowing the future challenges and proactively preparing for them makes UGC moderation more resilient and effective.

- Advanced Deepfakes and AI Manipulation

Platforms should develop advanced AI tools capable of detecting and verifying manipulated media. Conducting user education on recognizing potential misinformation would also be a good idea.

- Evolving Hate Speech and Toxic Behavior

Platforms should implement AI-driven sentiment analysis and use NLP to detect nuanced forms of hate speech, mitigating toxicity in the digital space.

- Privacy Concerns in Moderation

Platforms must use privacy-preserving AI techniques, consistently anonymize user data, and clearly explain to users how they collect and handle their data during moderation.

- User-Generated Disinformation

Platforms must incorporate AI algorithms for real-time fact-checking, collaborate with information validation groups, educate users about media literacy, and encourage individuals to use their critical thinking skills.

Ensure Creative, Positive, and Relevant UGC with Chekkee!

Overall, platforms can create a trustworthy online environment when they know and implement the innovations and emerging trends of UGC content moderation. Integrating technological advancements goes a long way in boosting the efficiency and effectiveness of content moderation processes.

Moreover, observing ethical considerations while preparing for future challenges ensures a safe, positive, and enjoyable digital community. This way, platforms can attain and maintain the integrity of content moderation practices.

So, to create a remarkable online environment, consider outsourcing your content moderation to Chekkee!

Chekkee is a flexible content moderation company that combines the expertise of human and AI content moderation. We offer robust and effective content moderation solutions tailored to your business needs.

Our experienced content moderators strive to look after each piece of content and ensure it is creative, relevant, and within community guidelines.

Create a safe space while staying ahead of the game! Contact us for more info.